by Richard L. Sawyer and Munther Salim, Ph.D. C.E.M., LEED AP, CDCEP, ATS

Data Centers are large, important investments that, when properly designed, built and operated, are an integral part of the business strategy driving the success of any enterprise. Yet the central focus of organizations is often the acquisition and deployment of the IT architecture equipment and systems with little thought given to the structure and space in which it is to be housed, serviced and maintained. This invariably leads to facility infrastructure problems such as thermal “hot spots”, lack of UPS (uninterruptible power supply) rack power, lack of redundancy, system overloading, high energy consumption and other issues that threaten or prevent the realization of the return on the investment in the IT systems. The solution is to fully understand the capacity of the data center to support “mission critical loads” (the IT infrastructure), the availability (“uptime” potential) of the data center, the energy efficiency and the condition of the key facility components and systems. Management then can make informed decisions based on facts, which leads to better direction of resources to close the known gaps between “what is” and “what should be” in systems reliability.

Sound management decisions rest on knowing:

A well designed facility infrastructure assessment provides the facts needed for informed decision making, which minimizes waste in the form of misdirected investment, unrealized investment returns, and over- or under-sizing of the facility infrastructure systems needed to support the IT infrastructure.

Many data center managers and operations directors do not know the capacity, utilization, or the availability of their data centers. This leads to the inability to:

Missing any one of these business drivers can mean anything from “a career changing event” to a lost opportunity to contribute significantly to the success of a business strategy.

Supporting a change in the Business Environment:

A well designed and executed assessment supports the ability to respond to a change in the business environment. IT processing, systems and software are now an integral part of company offerings, either in distinct products (e.g., the software driving your digital camera), or services (e.g., on-line real time quotes from your car insurance company). The speed of business today is approaching real time, and change is the constant, not the exception. The ability to react to changes in the business environment quickly and effectively is not only a competitive advantage, but a requirement. Understanding your capacity and utilization levels helps you understand your margin: the resources a data center manager can redirect and redeploy to manage change effectively.

Optimizing the IT Architecture:

Let’s face it, the cost of computing has gone down markedly. More processing can be obtained per dollar invested than ever before. With this drop in actual processing costs has come a concurrent increase in the thirst of companies for more processing as software applications become key to business products and business efficiency, as noted above. Instead of seeing a reduction in the size of IT platforms due to increased processing capacity in ever smaller footprints, the number of platforms and systems has increased to meet business demands. This has led to large amounts of equipment with very low utilization levels (10-20% is not uncommon), which means a lot of equipment is being used ineffectively and inefficiently. The solution has been to consolidate applications on fewer servers to drive up utilization through “virtualization”, and to refresh equipment with deployments that deliver processing capacity more efficiently. Knowing the ability of a given data center or data center space to support these consolidation and optimization efforts is key to realizing the value of the investment and attaining the project goals. A well designed and executed assessment will identify both the strengths of a data center facility infrastructure that can support the optimization project, and the gaps that must be closed as part of the project to ensure success.
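
As a rough illustration of the consolidation arithmetic described above, the sketch below estimates how many hosts would be needed to carry the same aggregate work at a higher utilization. The function name, the 100-host fleet and the 60% target are hypothetical values chosen for illustration; only the 10-20% starting utilization comes from the text. It assumes identical hosts and ignores virtualization overhead and peak-load headroom, so it is a first-pass estimate, not a sizing method.

```python
def hosts_after_consolidation(current_hosts: int,
                              current_util_pct: int,
                              target_util_pct: int) -> int:
    """Estimate hosts needed to carry the same aggregate work at a higher utilization.

    Utilizations are whole-number percentages so the ceiling division stays exact.
    Assumes identical hosts and no virtualization overhead (illustrative only).
    """
    if current_hosts < 0:
        raise ValueError("current_hosts must be non-negative")
    if not (0 < current_util_pct <= 100 and 0 < target_util_pct <= 100):
        raise ValueError("utilization percentages must be in 1..100")
    # Aggregate work expressed in "host-percent" units.
    aggregate_work = current_hosts * current_util_pct
    # Integer ceiling division: smallest host count that keeps each host
    # at or below the target utilization.
    return -(-aggregate_work // target_util_pct)


if __name__ == "__main__":
    # Hypothetical fleet of 100 hosts at 15% utilization, consolidated to 60%.
    print(hosts_after_consolidation(100, 15, 60))
```

Under these assumptions a fleet running at 15% could shrink to roughly a quarter of its size. Whether the facility can actually support the denser deployment is exactly what the assessment has to establish.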

Meeting Mandates for Efficiency:

Going green is not just good business in the 21st century; it is becoming a corporate and, eventually, government mandate. Efficiency in a data center is ultimately the ratio of the electrical power being delivered to a data center at the utility service, to the electricity needed to run the IT infrastructure systems and platforms, which is where the real “work” of a data center is being performed. Knowing the utility capacity through a power survey, and how much of it is being used for just the IT equipment, makes it possible to identify the systems which should be targets for efficiency improvement. If, for example, an assessment finds that the UPS system is significantly oversized for the data center IT equipment load, and there are multiple UPS modules in the system supplying redundancy, the analysis of the data gathered in the survey might suggest shutting down one redundant module to achieve a higher efficiency. This simple step may be counterintuitive, but the management decision to do so would be supported by the facts discovered and analyzed in the assessment.
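
The ratio described above, and the effect of taking a redundant UPS module offline, can be sketched as follows. The function names and all kW figures are hypothetical. The sketch reports only the load fraction per UPS module; whether a higher load fraction actually improves efficiency depends on the specific UPS efficiency curve, and removing a module also changes the redundancy level, neither of which is modeled here.

```python
def power_ratio(total_facility_kw: float, it_load_kw: float) -> float:
    """Ratio of power delivered at the utility service to power used by IT equipment."""
    if it_load_kw <= 0:
        raise ValueError("it_load_kw must be positive")
    return total_facility_kw / it_load_kw


def ups_module_load_fraction(it_load_kw: float,
                             module_capacity_kw: float,
                             modules_online: int) -> float:
    """Fraction of each module's capacity in use, assuming the load is shared equally."""
    if module_capacity_kw <= 0 or modules_online < 1:
        raise ValueError("need positive module capacity and at least one module online")
    return it_load_kw / (module_capacity_kw * modules_online)


if __name__ == "__main__":
    # Hypothetical survey readings: 800 kW at the utility service, 500 kW of IT load.
    print(f"facility-to-IT power ratio: {power_ratio(800, 500):.2f}")

    # Hypothetical UPS system: three 500 kW modules carrying a 300 kW load.
    for online in (3, 2):
        fraction = ups_module_load_fraction(300, 500, online)
        print(f"{online} modules online: each loaded to {fraction:.0%}")
```

In this made-up case each module runs at 20% of capacity with three modules online and 30% with two, which is the kind of fact an assessment would weigh against the required redundancy before recommending a shutdown.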

Realizing Return on Investment (ROI) in Projects:

Sound business practices drive investment decisions. IT systems and software represent significant allocations of company resources. Investments in projects to improve, upgrade or consolidate almost always have a return on investment (ROI) that justifies the management decision to approve the project. Frequently, the projects focus on one aspect, such as just the IT server investment, or the software upgrade, without considering the data center as an end-to-end information processing tool. Investments are justified and made on partial information, which can result in an unrealized return on the investment when the new equipment or systems cannot be supported adequately by the facility infrastructure. The result is equipment that operates in a degraded mode because it is too hot (not enough cooling capacity), has insufficient redundancy (no dual power source for dual-corded loads), or has insufficient space (no rack space that fits the manufacturer’s requirements). These unforeseen constraints lead either to a loss of anticipated processing capacity or to additional investment to upgrade the facility infrastructure to meet the needs of the equipment, both of which drive down the ROI. A well designed and executed assessment provides the information for an accurate ROI because it will identify all the factors that need to be addressed in the investment to fully realize the anticipated benefit (return).

Elements of a Good Assessment:

Assessments are targeted to a multitude of concerns: IT architecture, software, energy efficiency, electrical power, cooling, security, maintenance practices, thermal and air management, etc. They all have common elements which should be clear when their Scopes of Work (SOWs) are presented for consideration in purchasing or conducting an assessment service. These elements, all of which should be in the assessment report, are:

Survey and data collection

Analysis

Recommendations

Survey:

A survey may take many forms but is essentially a review of information, systems or site conditions to establish known, valid facts on which to base the analysis and recommendations necessary for management decision making. This may involve reviewing and collecting data from existing site documentation, equipment specifications, software capabilities, and facility or IT infrastructure systems. Sample or comprehensive power and thermal measurements may also be collected from infrastructure equipment. A survey is frequently conducted on site, though some surveys can be done remotely given the power of the internet and digital information processing (e.g., a survey of a facility building control system via remote access link). The results of the survey are the “findings”: objective, generally agreed-upon facts on which the analysis will be performed.

Analysis:

Once the survey establishes the facts, they can be held up to the scrutiny of interpretation. As with any human endeavor, this is where variances are encountered, since a useful analysis relies on the expertise and experience of the person or persons looking at the facts and drawing conclusions. Validity of the analysis is only assured if it is based on facts and an objective study by a qualified and experienced professional. The expertise to do this is often found “in-house” (the staff and management of the data center) or can be outsourced to a qualified consultant, but in either case the implications of the facts as discovered in the survey must be understood and articulated in a useful manner. Through this process, gaps are discovered, qualified and quantified, as well as assets and attributes that may be leveraged to close the gaps.

Recommendations:

An analysis leads to identification of gaps, and the action plans to close the gaps constitute the recommendation phase of a good assessment. How extensive the recommendations are is dictated by the scope of work of the assessment. This may range from high level recommendations that provide global guidance for strategy making by management, to detailed gap remediation on a rack by rack deployment for IT service personnel. It may include, if specified in the scope of work, a matrix of actions that range from easiest to most difficult to implement, and cost to implement with attendant ROI calculations.
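
One simple way to organize such a matrix is to rank candidate actions by simple payback (implementation cost divided by annual savings). The sketch below is illustrative only: the `Measure` class, the measure names, the dollar figures and the five-year cutoff passed in the example are hypothetical placeholders, and a real assessment would likely use a fuller ROI calculation.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Measure:
    """One recommended action with its estimated cost and annual savings (dollars)."""
    name: str
    cost: float
    annual_savings: float

    def __post_init__(self) -> None:
        if self.annual_savings <= 0:
            raise ValueError("annual_savings must be positive to compute payback")

    @property
    def simple_payback_years(self) -> float:
        # Simple payback ignores discounting, incentives and escalation.
        return self.cost / self.annual_savings


def rank_by_payback(measures: List[Measure],
                    max_years: Optional[float] = None) -> List[Measure]:
    """Sort measures from shortest to longest payback, optionally dropping slow ones."""
    ranked = sorted(measures, key=lambda m: m.simple_payback_years)
    if max_years is not None:
        ranked = [m for m in ranked if m.simple_payback_years <= max_years]
    return ranked


if __name__ == "__main__":
    # Hypothetical placeholder measures, not taken from any real assessment.
    candidates = [
        Measure("Measure A", cost=50_000, annual_savings=25_000),
        Measure("Measure B", cost=120_000, annual_savings=20_000),
        Measure("Measure C", cost=10_000, annual_savings=10_000),
    ]
    for m in rank_by_payback(candidates, max_years=5):
        print(f"{m.name}: {m.simple_payback_years:.1f} years")
```

Sorting by payback puts the quick wins first, and the optional cutoff filters out measures that do not meet a stated investment criterion.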

Assessments range in complexity and subject matter, but all strive to answer the same basic questions: What do I have? What can it do? Where can I direct resources to do the most good? For data center managers, these are key issues which are addressed through entry level assessment products like:

1) A Basic Capacity Assessment –

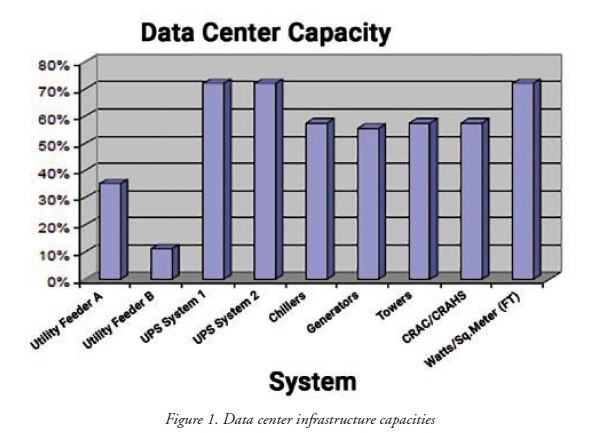

Provides quantitative capacity and availability information that can help managers allocate the facility’s resources more effectively. Identifies the business strategy driving the data center.

2) Thermal Quick Assessment –

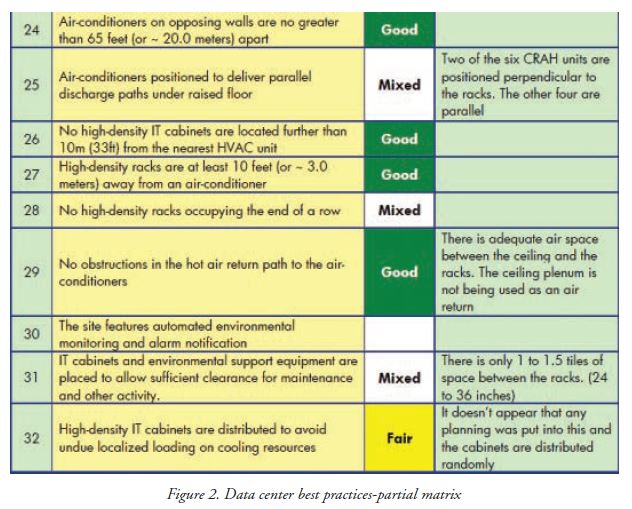

Typically recommended for data centers experiencing thermal problems such as hot spots or preparing for high-density installations. It is primarily recommended for customers who are unsure about the air management and provisioning of their existing cooling system. The assessment provides an evaluation of how well the data center is adopting industry best practices such as that shown in Figure 2. Also, this assessment

3) An Infrastructure Condition and Capacity Assessment –

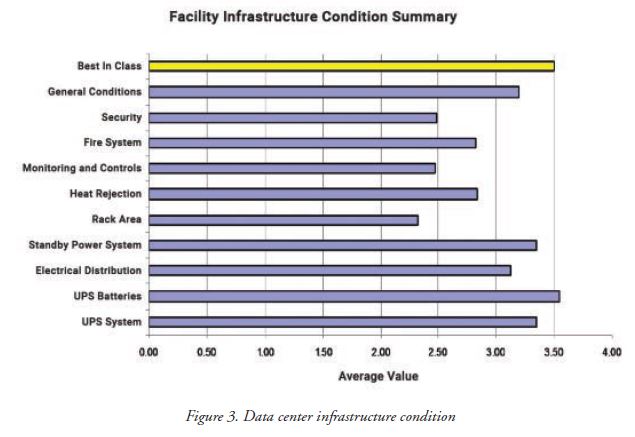

Helps managers understand the availability needs and how well the facility infrastructure matches those needs by providing a system-by-system evaluation of the entire critical infrastructure.

4) Thermal Comprehensive Assessments –

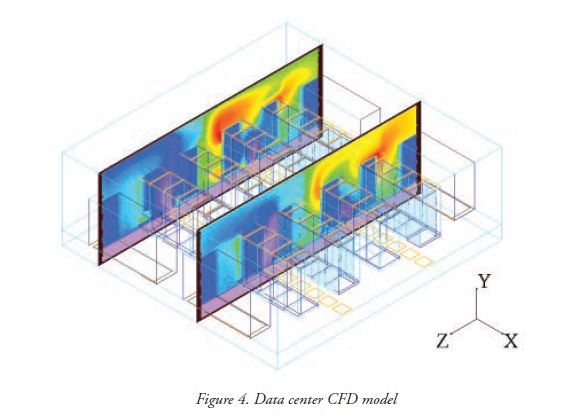

The essence of this service is a computational fluid dynamics (CFD) model that simulates the probable effects of potential cooling and air management improvements, the addition of new equipment, or failure scenarios. It also provides granular details such as a graphical representation of the area influenced by each air-conditioning unit. This insight helps to place mission critical equipment in locations influenced by multiple air-conditioning units, potentially protecting IT equipment from overheating due to cooling or air management issues.

5) Energy Efficiency Assessment –

The explosion of digital content, big data, e-commerce, and Internet traffic is making data centers one of the fastest-growing users of energy. In addition, the increase in energy costs, public pressure due to environmental mandates, and procurement of high-power-density equipment are making the energy management of data centers a high priority. Whether the facility is a server room, an enterprise data center or a server farm that runs cloud computing, this service helps to manage energy efficiency by calculating baseline energy efficiency and greenhouse gas emission metrics, identifying mechanical, electrical and operational issues in the facility, and developing a future state roadmap of conservation measures and their ROI.

Gathering facts, analyzing them and developing recommendations is an expensive process, whether done internally or through consultants, because it involves high value human activity. While most managers focus on the hard dollar costs since these directly influence budgets, there are soft costs that must be accounted for when using internal staff. The individuals most apt to bring validity and value to an internal assessment are usually the most experienced technical managers, who also tend to have heavy and important workloads. Diversion from daily duties bears three (3) costs: the direct cost of their time, the time of the persons charged with executing their responsibilities while they work on the assessment, and the opportunity cost of their contributions while they are away from their regular roles. These costs, while seemingly obscure, are real, and a decision to conduct an assessment either internally or through a consultant should take them into account. Frequently, when these costs are weighed against the cost of obtaining an assessment through an external source, the decision is made to avoid the potential internal disruption and go with a consultant having the required expertise.

Consultants’ fees for conducting assessments reflect the hours of expertise needed to obtain and analyze the information, and to produce the report with recommendations. They frequently include travel hours, administrative support costs, and the facilitation costs of running any business (e.g., IT, management, finance and accounting). Rates typically range from $150 to $300 per hour depending on the expertise of the assigned professionals, which translates into typical assessment costs ranging from $10,000 for basic reports to well over $100,000 for complex assessments with very detailed costing and implementation recommendations.
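
To give a feel for what these figures imply, the sketch below converts an assessment fee into an approximate range of billed hours using the $150 to $300 hourly rates quoted above. The function name is hypothetical, and the sketch assumes the whole fee is billed at a single hourly rate, which simplifies how travel and overhead are actually charged.

```python
from typing import Tuple


def implied_hours(fee_usd: float,
                  rate_low_usd: float = 150.0,
                  rate_high_usd: float = 300.0) -> Tuple[float, float]:
    """Return (fewest, most) billed hours implied by a fee and an hourly rate range.

    Assumes the entire fee is billed hourly at one rate (a simplification).
    """
    if fee_usd < 0 or rate_low_usd <= 0 or rate_high_usd < rate_low_usd:
        raise ValueError("fee must be non-negative and 0 < rate_low <= rate_high")
    # The highest rate buys the fewest hours; the lowest rate buys the most.
    return fee_usd / rate_high_usd, fee_usd / rate_low_usd


if __name__ == "__main__":
    for fee in (10_000, 100_000):
        fewest, most = implied_hours(fee)
        print(f"${fee:,}: roughly {fewest:.0f} to {most:.0f} hours")
```

On these assumptions a $10,000 basic report corresponds to roughly 33 to 67 hours of professional time, and a $100,000 assessment to roughly 333 to 667 hours.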

Weighed against the cost of performing an assessment through consulting resources are the benefits, tangible and intangible:

1) The “as-is” state of the data center is known.

Utility capacities and efficiencies, existing load levels, margins for system expansion are defined. Vulnerabilities (Single Points of Failure) are understood leading to the development of risk mitigation planning or the design of projects to reduce the threat of failure.

2) The capabilities of the data center are known.

The capacity to support IT systems and operations is understood, along with any gaps that must be addressed to support existing or planned equipment. The chances of investing in unsupportable equipment, or systems, that will operate in a degraded mode are reduced or eliminated through addressing gaps in a methodical manner. The availability of the data center is defined and gaps between the availability as required by the business strategy and the availability inherent in the data center design are quantified. Projects can be designed to address better availability and increased capacity based on real information.

3) Investments in improving the availability, efficiency and the capacity can be directed to those areas that will yield the greatest return.

Operating from a factual base with clear analysis reduces guesswork and speculation and allows for a “best practice” solution for that particular data center supporting the assigned processing loads. True ROI can be defined because all the gaps and variables have been identified through a careful analysis which leads to cost effective recommendations.

Given the cost of data centers ($750-$3000 per square foot), and the value of the IT equipment they support (3X-5X the data center cost), the potential cost of misplaced investment is enormous, frequently in the tens of millions of dollars. Against the risk of investing in the wrong place, or at the wrong magnitude, an assessment is a good investment both in hard dollar value and in intangible benefits. Reducing operational stress, moving from a reactive to a proactive management process, and directing focus to problems that have high potential impact on business success are all intangible benefits that ultimately lead to tangible results. A well designed and executed data center assessment is the foundation for accruing these benefits.
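
The scale of the investment at stake can be sketched from the ranges quoted above. The function name and the 10,000 square foot floor area in the example are hypothetical; the cost per square foot and the 3X-5X multiple are the figures from the text, applied here as simple low and high bounds.

```python
from typing import Tuple

Range = Tuple[float, float]


def investment_range(floor_area_sqft: float,
                     cost_per_sqft: Range = (750.0, 3000.0),
                     it_multiple: Range = (3.0, 5.0)) -> Tuple[Range, Range]:
    """Return ((facility_low, facility_high), (it_low, it_high)) in dollars.

    The low bound pairs the lowest cost per square foot with the lowest IT
    multiple; the high bound pairs the highest of each.
    """
    if floor_area_sqft < 0:
        raise ValueError("floor_area_sqft must be non-negative")
    facility_low = floor_area_sqft * cost_per_sqft[0]
    facility_high = floor_area_sqft * cost_per_sqft[1]
    it_low = facility_low * it_multiple[0]
    it_high = facility_high * it_multiple[1]
    return (facility_low, facility_high), (it_low, it_high)


if __name__ == "__main__":
    # Hypothetical 10,000 sq. ft. data center.
    facility, it_equipment = investment_range(10_000)
    print(f"facility: ${facility[0]:,.0f} to ${facility[1]:,.0f}")
    print(f"IT equipment: ${it_equipment[0]:,.0f} to ${it_equipment[1]:,.0f}")
```

Even for this modest hypothetical floor area the facility alone falls between $7.5 million and $30 million, which is why the cost of an assessment is small relative to the investment it protects.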

Sound decision making in data center management is the key to realizing the success of a business strategy. Decisions made objectively, based on valid facts and thorough analysis, yield strong results by directing resources where they can achieve the greatest positive impact for the minimum expenditure or effort. Assessments are a powerful management tool in obtaining those facts, analyzing the gaps between “what is” and “what should be”, and developing action plans to close those gaps by directing resources efficiently. In this manner, the goal of attaining the maximum level of availability is achieved with a thorough knowledge of the capabilities of the data center to adapt to change when driven by a relentless business environment. Assessments are good investments.

Project & Goal Statement

The client requested a detailed energy efficiency assessment to develop a retrofitting roadmap aimed at lowering energy spending and achieving the energy efficiency targets of ENERGY STAR certification for this Tier III data center with 45,000 sq. ft. (4500 sq. m.) of raised floor area.

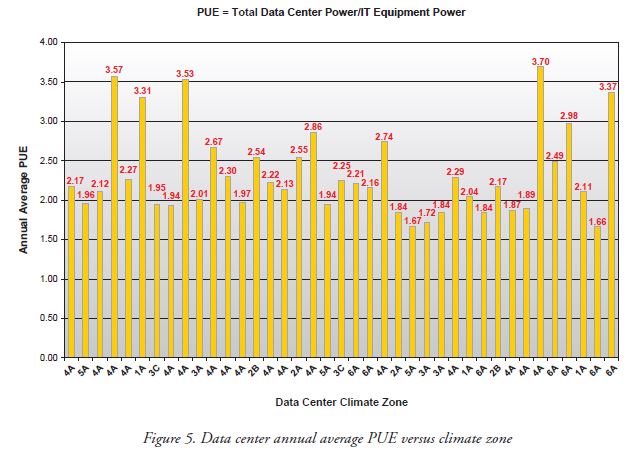

As a result of conducting a Level III energy audit, the baseline PUE of the data center was determined to be 1.60. Although this was among the lower PUEs when compared to our database of 200+ facilities and to DOE/LBNL benchmarking data, several energy conservation measures were identified that could reduce energy use and the associated cost and emissions. One challenge identified early on was that the client’s energy rate was very low, so the team was not sure whether the financial analysis would yield a payback of less than 5 years, per the client’s criteria.

Findings & selected recommendations

As a result of the on-site data gathering, the assessment team concluded the following:

Several “what if” scenarios were then analyzed and the following measures were selected for implementation:

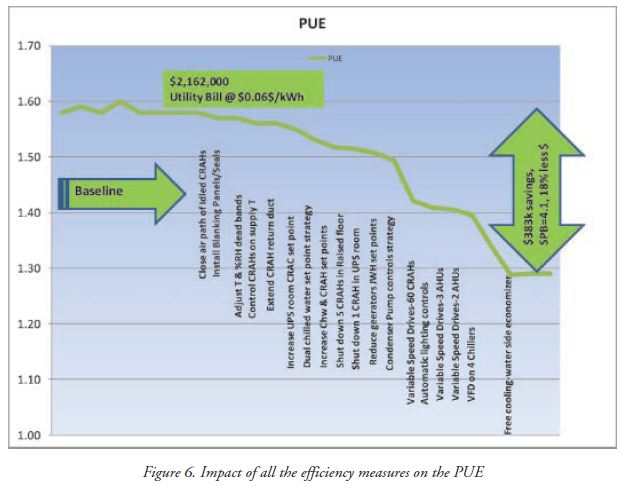

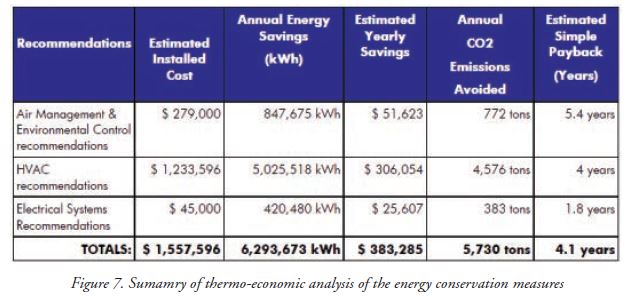

As shown in Figure 6, the selected feasible energy saving measures resulted in an annual cost savings of $383,285 and an annual energy savings of 6,293,673 kWh. This represented an 18% reduction in the annual average PUE and utility costs. Additionally, this meant a reduction of 5,730 tons of CO2 emissions. The total local utility incentives were calculated to be $165,576. The table in Figure 7 summarizes estimated savings for various measures with relatively short payback times.
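
A few quantities can be derived from the reported figures as a sanity check. The sketch below is not part of the original analysis: the function name is hypothetical, and it assumes that the cost savings come entirely from the energy savings and that the 18% reduction applies directly to the 1.60 baseline PUE.

```python
# Figures reported in the case study.
ANNUAL_COST_SAVINGS_USD = 383_285
ANNUAL_ENERGY_SAVINGS_KWH = 6_293_673
CO2_REDUCTION_TONS = 5_730
BASELINE_PUE = 1.60
PUE_REDUCTION = 0.18  # 18%, assumed here to be relative to the baseline PUE


def derived_metrics() -> dict:
    """Derive an implied energy rate, emission factor and post-retrofit PUE."""
    return {
        # Dollars saved per kWh saved: an implied blended energy rate.
        "implied_rate_usd_per_kwh": ANNUAL_COST_SAVINGS_USD / ANNUAL_ENERGY_SAVINGS_KWH,
        # Tons of CO2 avoided per MWh saved: an implied emission factor.
        "tons_co2_per_mwh": CO2_REDUCTION_TONS / (ANNUAL_ENERGY_SAVINGS_KWH / 1000),
        # PUE after applying the reported reduction to the baseline.
        "pue_after": BASELINE_PUE * (1 - PUE_REDUCTION),
    }


if __name__ == "__main__":
    for name, value in derived_metrics().items():
        print(f"{name}: {value:.4f}")
```

Under these assumptions the figures imply an energy rate of roughly $0.061 per kWh, about 0.91 tons of CO2 per MWh saved, and a post-retrofit PUE of about 1.31, consistent with the observation that the client's energy rate was low.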

Richard L. Sawyer is Strategist/Director Commissioning and Operational Consulting at Hewlett-Packard Enterprise. He can be reached at [email protected].

Munther Salim, Ph.D. C.E.M., LEED AP, CDCEP, ATS is Strategist/Director, Assessments and Energy Services at Hewlett-Packard Enterprise. He can be reached at [email protected].