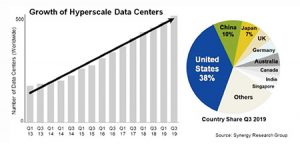

Hyperscale builders, colocation builders and enterprise data center builders are spending billions of dollars per year on buildings and servers, and all indications are that this growth will continue. There are two main reasons why they are spending this much money. The first is that we need more storage space for our pictures, our music, our gaming, our cloud-based software, our internet of things and, of course, our cat videos. The second is that it is profitable: they spend billions of dollars on construction and they make billions of dollars in profit.

Yes, the future of data centers looks bright. The real question is not whether the building trend will continue but what form construction will take: hyperscale large, large, medium or 5G small. For all stakeholders, the future is wide open.

COVID-19

COVID-19 has changed the American, and indeed the world’s, business landscape. Data centers have both benefitted from and been hurt by COVID-19 mitigation and prevention measures. The hurt is that the virus can infect so many people, including data center technicians, that rack buildout and telecommunications connections have slowed down. Data center construction requires hundreds of experienced personnel for layout, installation, construction and commissioning. When the crew for any one of these phases is infected, the project suffers from reduced manpower, extended construction schedules and increased costs.

The benefit of COVID-19 to data centers is that many people are staying at home and working, playing or shopping online. Working means using the cloud, which is full of business applications, including email, virtual meetings on Skype, Microsoft Teams or Google Meet, and of course online schooling. Engineering firms such as Salas O’Brien, AECOM and Kw Mission Critical have been able to work remotely, which they are doing a lot of now and will continue to do for the foreseeable future. Playing means video games on Xbox and PlayStation, video poker, and movies on Netflix. Shopping, of course, means Amazon, Kroger and Home Depot. Telecom data center operators such as Verizon, AT&T and T-Mobile are also benefitting from an increase in conference calls, emails and texting, as well as data usage. With more people going online and staying for longer periods of time, more data center capacity is required, now.

DATA CENTER SIZE

We have seen regional data centers grow in size from 16 MW to 25 MW to 50 MW to 150 MW, and we know this trend is continuing up to 300+ MW. These hyperscale data centers are needed to keep up with capacity because one large 350 MW center is ‘easier and less expensive’ than seven 50 MW data centers. There are several issues with hyperscale data centers. One is that they can exceed the air permit limits allowed by the EPA, which then requires a noncontiguous campus. A second is that a ‘redundant backup’ data center requires another hyperscale data center. A third is location. For instance, data centers do not have to be located in Northern Virginia, but many choose to be because of its unique connectivity as an internet hub. Ashburn, in Loudoun County, VA, is known as the hub of the internet, and demand in that region is astronomical. One reason is that in 2018 the Marea undersea fiber optic cable began operating between Spain and Virginia, with transmission speeds of up to 160 terabits per second, 16 million times faster than the average home internet connection. We are seeing this hyperscale trend in many areas throughout the country and expect it to continue for another 8 to 10 years.

THE SPEED OF 5G

The promise of 5G-speed cell phone usage did not happen overnight; in fact, it did not happen over a two- to three-year period. A 5G antenna has a much smaller range than a 4G antenna, so 5G will require five times as many antennas as 4G. Urban areas are the first, and probably the only, areas to get the promised 5G speed. And 5G will not be a promise kept while you are inside a building, as the walls, windows and ceilings degrade 5G speed considerably.

Speed, as we know, is an important factor for data usage. The closer a data center is to the user, the faster the connection. You can’t have a hyperscale data center on every corner, but you can have an edge data center at select points that greatly increases upload and download speeds to a cloud-based data center. Cell towers near the customer’s point of use will have small edge micro data centers that improve connectivity for speed-sensitive operations like financial applications, streaming and online gaming. Several companies, like EdgeMicro, are building and installing these 7- to 14-rack, container-based data centers to enhance our internet experience. These edge data centers, or super-nodes, will be required for the eventual increase in internet connectivity.

OPERATIONS

The majority of the ‘hyperscale’ companies that own and operate the large data centers prefer to manage, or be actively involved in, the construction of their data centers. When they have a large demand for rack space, which many of them do, and they can’t build their data centers fast enough, they need a Plan B, which is leasing space from a colocation facility. As one of the larger hyperscalers says, CQA (Cheap, Quick and Available) is the name of the game. Until the Q element (Quick) is resolved, many hyperscalers with that demand are leasing space from colocation companies, which have space available in their ‘speculative’ data centers. This is not a decision made lightly, as most lease commitments run 10 to 15 years. This trend is expected to continue, which is good both for the hyperscalers, like Microsoft, Google, Facebook and Amazon, as their demand continues to grow, and for the colocation companies, like QTS, CyrusOne, Compass, Digital Realty and RagingWire, which build speculative colocation facilities and fill them with these large customers as well as many smaller ones.

Data center operations are typically managed by a company that started out in the data center business and then developed expertise in management and operations, like Schneider, T5 and BGIS. Data center reliability is required to be high, to the point that no failures are allowed. Each company has its own design for reliability, which may be similar to other companies’ designs, and that design gets revised and refined on each new facility that is built. This ‘refinement’ accomplishes several things: higher reliability, quicker speed to market, less complexity and lower overall cost. If these new ‘refinements’ can be implemented on previously built-out projects without construction or equipment modification, they usually are. More data centers will depend on containers, automation, cloud, edge computing and other technologies that are, simply put, open to the core. This means Linux will not only be the champion of the cloud, it’ll rule the data center. Operating systems like CentOS 8, Red Hat, SLES and Ubuntu are all built on Linux and will see a massive rise in market share by the end of the year.

Quantum computers are still in the early stages of development, but they will have a significant impact on the data center of the future. They will drive new breakthroughs in science, AI, medications and machine learning that help diagnose illnesses sooner, develop better and more efficient materials, and improve financial strategies to help us live better in retirement. The infrastructure required by quantum computers is hard to build and operate, as they require temperatures near absolute zero, about -459 degrees Fahrenheit. IBM Quantum has designed and built the world’s first integrated quantum computing system for commercial use, and it operates outside the walls of the research lab.

As our demand for data storage continues to grow, solid-state drives (SSDs) will become the preferred data storage method and will eventually replace traditional drives. SSDs are faster, can store more data and are more reliable. The change to solid-state drives will allow the same rack space to store a much greater amount of data. The data explosion happening now from AI, IoT, cat videos and other business applications will quadruple current data storage requirements in 5 years.
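As a rough check on that forecast, quadrupling in five years works out to a compound annual growth rate of about 32%. The short Python sketch below shows the arithmetic. The function name is illustrative, and the calculation assumes smooth year-over-year compounding, which the forecast itself does not specify.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Return the compound annual growth rate that turns 1x into
    `multiple`x over `years` years (assumes smooth compounding)."""
    if multiple <= 0 or years <= 0:
        raise ValueError("multiple and years must be positive")
    return multiple ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Storage requirements quadrupling (4x) over 5 years.
    rate = implied_annual_growth(4.0, 5.0)
    print(f"Implied annual growth: {rate:.1%}")  # about 32% per year
```

In other words, storage demand would need to grow by roughly a third every year to quadruple in that time frame.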

CONTAINERIZATION

Webster defines containerization as a shipping method in which a large amount of equipment is packaged into large standardized containers.

Containerization increases speed to market and increases quality, since ‘construction’ is now done in a factory setting. It allows most level 4 functional commissioning activities to be done off site, leaving only minimal level 4 commissioning and the level 5 integrated systems testing to be done on site. Containerized equipment offers many advantages, notably a lower cost and a quicker speed to market. A successful containerized design reduces the engineering, construction, commissioning and operational costs of the equipment. The use of containerized solutions will continue to increase and evolve. Containerization will move most of the level 4 commissioning process to the left, that is, earlier, in the overall construction schedule. Also, since less construction work is required on the job site, it will reduce trade stacking in many areas, making for a safer job site.

Hyperscale builders pursue three things: quick speed to market, a lower cost per MW and higher quality. These three requirements call for pre-loaded, standardized containers built in a factory, where quality is highest and the work is dedicated to container assembly, resulting in a higher quantity and quality of constructed equipment. In addition, the factories are usually built near urban areas where an experienced and trained work force is available.

Modular data centers will be the key going forward, at least for colocations. Hyperscale developers will require both modular and scalable. The sweet spot for colocations will range from 4 to 8 MW. Hyperscalers will be in the same range, but scalable, as in building a 64 MW building complete with infrastructure and then adding the equipment in 8 MW building blocks. Large buildouts require a lot of available power, but connectivity is also a limiting factor. Higher-strand-count fiber cables, like 3456 and 2880, which are typically armored and used outside, may be used inside, but current limitations like bending radius will have to be resolved. When the higher-count fibers are used inside, data capacity will go up substantially, and that capacity is required for the data racks. The buildout of racks can also be done in a modular format. Modular rack buildout and testing is a natural evolution of what has been done previously in telecommunications.
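The scalable approach described above, a fully built shell filled in fixed-size equipment blocks, comes down to simple arithmetic. The Python sketch below is only an illustration: the function names are hypothetical, and the 64 MW shell and 8 MW block are the example figures from the text, not a design standard.

```python
import math
from typing import List


def blocks_needed(demand_mw: float, block_mw: float = 8.0) -> int:
    """Number of whole equipment blocks required to cover a given IT demand."""
    if demand_mw < 0 or block_mw <= 0:
        raise ValueError("demand must be non-negative and block size positive")
    return math.ceil(demand_mw / block_mw)


def buildout_steps(shell_mw: float = 64.0, block_mw: float = 8.0) -> List[float]:
    """Cumulative installed capacity after each block is added to the shell."""
    count = int(shell_mw // block_mw)
    return [block_mw * i for i in range(1, count + 1)]


if __name__ == "__main__":
    print(blocks_needed(64.0))   # blocks to fill the whole 64 MW shell
    print(blocks_needed(20.0))   # a 20 MW customer still needs whole blocks
    print(buildout_steps())      # capacity after each 8 MW increment
```

A 64 MW shell holds eight 8 MW blocks, so equipment spending can follow demand in eight steps rather than all at once.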

SPEED TO MARKET

Speed to market is number two on the data center priority list, right behind safety, with cost being number three. The time frame from breaking ground to lockdown has been greatly reduced by using site adaptations and containerization. Modular construction, in which equipment is built on skids in a factory, completely wired up and tested, has increased speed to market, similar to what McDean Electric has been doing for years. However, it is still not fast enough. Existing data center demand far outweighs the buildings currently in construction and in design. For hyperscale, what used to take 18 months has been reduced to 12 months, with some smaller colocation facilities approaching 6 months. This reduction in time is due to factory-commissioned modules and containers being built off site and then shipped to the project for connections and final commissioning, which tests the equipment at its full design load, typically using load banks such as Simplex.

Yet many developers still go with less expensive, that is, less experienced, data center contractors and subcontractors who have to go through the ‘data center learning curve’, which adds both time and cost to the project. As this industry continues to mature and developers spend their money on experience, standard designs and containerization, they will realize they can get an acceptable speed to market with high-quality contractors and an experienced labor force for construction and commissioning.

MULTI-STORY

Multi-story data centers have been around for some time now, mostly in repurposed office buildings that have been converted into data centers in land-scarce cities like Singapore, Hong Kong, London, New York and Atlanta. Due to the ‘small’ footprint of these buildings, they are typically colocation facilities. The electrical infrastructure and chillers are typically on the lower levels, with the rooftop reserved for cooling towers. That leaves the middle floors for the racks and servers. Purpose-built multi-story data centers can be, and are being, built with stairs, elevators, mechanical rooms and electrical rooms outside the building footprint, which makes the entire floor plate leasable for data center operations. Even in data center-rich ‘rural’ areas, like Ashburn in Northern Virginia, the price of land has escalated to north of $2M per acre, which makes multi-story data center construction a necessity. The preference is to stay single story, but total cost, safety and square footage requirements play a large role in those decisions and drive the need to build up.

POWER

Data centers use a lot of power, so how efficiently they use that power is important. By 2025, it is estimated that data centers will use 4.5% of the total energy used throughout the world. Power Usage Effectiveness (PUE) is the benchmark for that efficiency, and it will become more important as more data centers are built. PUE is the total building power divided by the IT server power, so the lower the PUE, the more efficient the building. An efficient data center ranges from 1.2 to 1.3 PUE, but with total immersion, water-cooled racks or high-density multi-blade servers, the PUE can be as low as 1.06 to 1.09. Getting to this low PUE makes the initial infrastructure more expensive, but the energy savings can be substantial, year over year. Other factors that have reduced energy usage are the increased use of LED light fixtures on motion sensors, like the Lightway edge-lit fixture from CCI, and modern electrical, server and rack equipment that tolerates a higher temperature and humidity range, some going as high as 90 degrees F and 90% humidity. The higher set point allows ‘free cooling’ in many areas. We all know the concept of ‘free’ isn’t quite true. While using ‘cool’ outside air as makeup air for the colo spaces does save energy by not requiring HVAC compressors, it still takes power to operate the fans and the water pumps of the adiabatic system. Energy savings, yes; free, no.
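To put the PUE numbers in perspective, the Python sketch below computes PUE from metered power and estimates the yearly energy saved by moving from one PUE to another at the same IT load. It is an illustration only: the function names and the 8 MW IT load are assumptions, and it treats the load as constant over all 8,760 hours of the year, which a real facility would not be.

```python
HOURS_PER_YEAR = 8760  # assumes the load runs flat out all year


def pue(total_building_kw: float, it_load_kw: float) -> float:
    """Power Usage Effectiveness: total building power / IT server power."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    if total_building_kw < it_load_kw:
        raise ValueError("total building power cannot be less than the IT load")
    return total_building_kw / it_load_kw


def annual_savings_mwh(it_load_kw: float, pue_before: float, pue_after: float) -> float:
    """Energy saved per year (MWh) by lowering PUE at a constant IT load."""
    overhead_reduction_kw = it_load_kw * (pue_before - pue_after)
    return overhead_reduction_kw * HOURS_PER_YEAR / 1000.0


if __name__ == "__main__":
    # Hypothetical facility: 10 MW at the meter, 8 MW reaching the servers.
    print(f"PUE: {pue(10_000, 8_000):.2f}")
    # Same 8 MW IT load, improving from 1.25 to 1.08.
    saved = annual_savings_mwh(8_000, 1.25, 1.08)
    print(f"Annual savings: {saved:,.0f} MWh")
```

For this hypothetical 8 MW IT load, dropping from a PUE of 1.25 to 1.08 removes about 1.4 MW of continuous overhead, or nearly 12,000 MWh per year, which is the kind of year-over-year saving that can justify the more expensive initial infrastructure.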

The availability of power is a concern for hyperscale data centers. Many large-scale operators do not utilize solar at the site, even though rooftop space is available. Rather than increase design and construction costs to reinforce the structure and roofing systems, they choose instead to buy and/or fund large-scale offsite solar or wind turbine projects to reduce their impact on the environment. Large data center campuses require a big financial and resource commitment from the power companies. If the data centers include large solar systems on their roofs or their property, they are basically telling the power companies “we need you at night, but not during the day”, which does not sit well. So they typically choose to fund offsite projects, similar to what Microsoft did in 2019 with ENGIE in Texas, where it purchased 230 MW. This purchase brought Microsoft’s renewable energy portfolio to more than 1,900 MW.

Data center-funded renewable energy projects will increase in number to help offset the carbon footprint data centers are placing on the Earth. These projects will mainly be solar panels and wind turbines. The hyperscale operators have a goal of 100% renewable energy. Google has already reached that goal.

THE FUTURE OF DATA CENTERS

The future of data centers is as vast as our imagination. New products will be invented, new techniques will be developed and new requirements will be ‘required’. Data centers of the future will include some or all of the following:

Total immersion of the servers in thermally conductive dielectric fluids to reduce cooling requirements. A piping infrastructure with pumps will be required, but the result will be fewer fans, fewer compressors and reduced power usage.

Deep underwater data centers that use the natural cooling effects of the ocean. These data centers will be limited in size, containerized and very efficient, with a lot of free cooling.

Large fuel cells in parallel, like those from Bloom Energy, will be used to power entire buildings, while smaller ones, like those from Ballard, may be used above the IT racks to power an individual rack. A natural gas infrastructure would be required for this innovation.

Industrial waste water (IWW) from cooling towers will go to storm sewers, not sanitary sewers. Currently, this ‘waste’ water has to be processed by waste treatment plants. The change will not only help the environment but also reduce costs to the project and to the local entity providing water treatment.

Natural gas generators to reduce emissions compared to diesel generators. This will require a natural gas infrastructure, and it will increase the ‘tank’ runtime from a typical 72 hours to ‘indefinite’.

Water-cooled racks and chips. The thought of bringing water inside a data center to the racks was ‘unheard of’ at one time, but now it may be required. Higher-density racks have to be cooled by unconventional, but proven and tested, means. Some very high-power, high-capacity chips, typically for AI, require water-based or liquid cooling at the actual chip. Higher education data centers, such as Georgia Tech’s, are using rear-door cooling techniques now. Water-cooled, high-powered, high-density chips, such as those by Cerebras, will raise the power required at each rack to as much as 60 kW. Google and Alibaba are already using water-cooled racks, with others soon to follow. How to recycle the heat from these servers will become someone’s Golden Ticket.

Higher-density racks, such as the 50 kW racks being used by higher education data centers like Georgia Tech’s. High-density racks with cooling delivered to the rack have been done in the past but are now becoming more commonplace.

Construction & Design: Data centers used to be built like a regular building, stick by stick onsite. The progression has gone from fully stick-built, to stick-built with some modular equipment, to today’s modular construction with some containerization and scalability. It is heading toward fully containerized and scalable.

SUMMARY

Data center construction has changed over the last two decades. It slowed drastically after the dot-com boom went bust, but has increased substantially since 2010. The growth rate of 15% year over year is expected to continue for the next 8 to 10 years. Data center CQA (Cheap, Quick and Available) can only be achieved by containerization that utilizes experienced engineers, contractors, subcontractors and commissioning agents. Data center developers apparently believe in a line from one of my all-time favorite movies, Field of Dreams: “Build it and they will come.” Close enough.

Tim Brawner, PE, CxA, LEED AP, EMP is National Commissioning Operations Manager at Salas O’Brien. He can be reached at [email protected].